Marvell announces new CPO architecture for custom AI accelerators

Leveraging its silicon photonics technology, the company says its XPUs with integrated co-packaged optics will enhance AI server performance by increasing XPU density from tens within a rack to hundreds across multiple racks

Marvell Technology, a company focusing on data infrastructure semiconductor solutions, has announced the advancement of its custom XPU architecture with co-packaged optics (CPO) technology. Building on its recently announced custom high-bandwidth memory (HBM) compute architecture, Marvell says it is extending its custom silicon leadership by enabling customers to integrate CPO into their next-generation custom XPUs. This would allow them to scale up their AI servers from tens of XPUs within a rack, currently connected by copper interconnects, to hundreds across multiple racks connected by CPO, enhancing AI server performance. According to the company, the architecture enables cloud hyperscalers to develop custom XPUs that achieve higher bandwidth density and deliver longer-reach XPU-to-XPU connections within a single AI server, with optimal latency and power efficiency. The architecture is now available for Marvell customers' next-generation custom XPU designs.

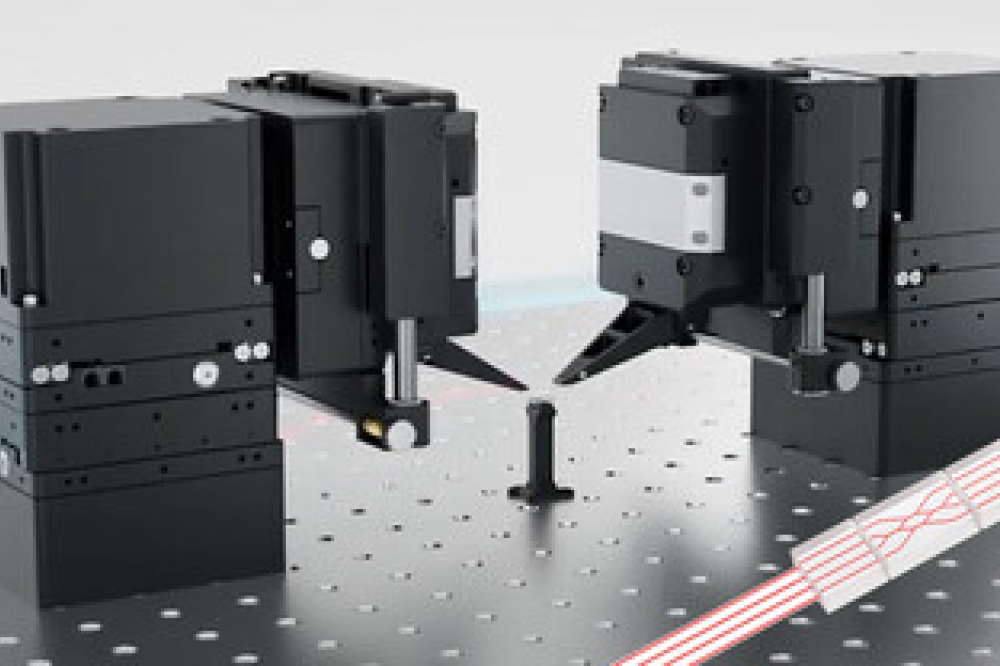

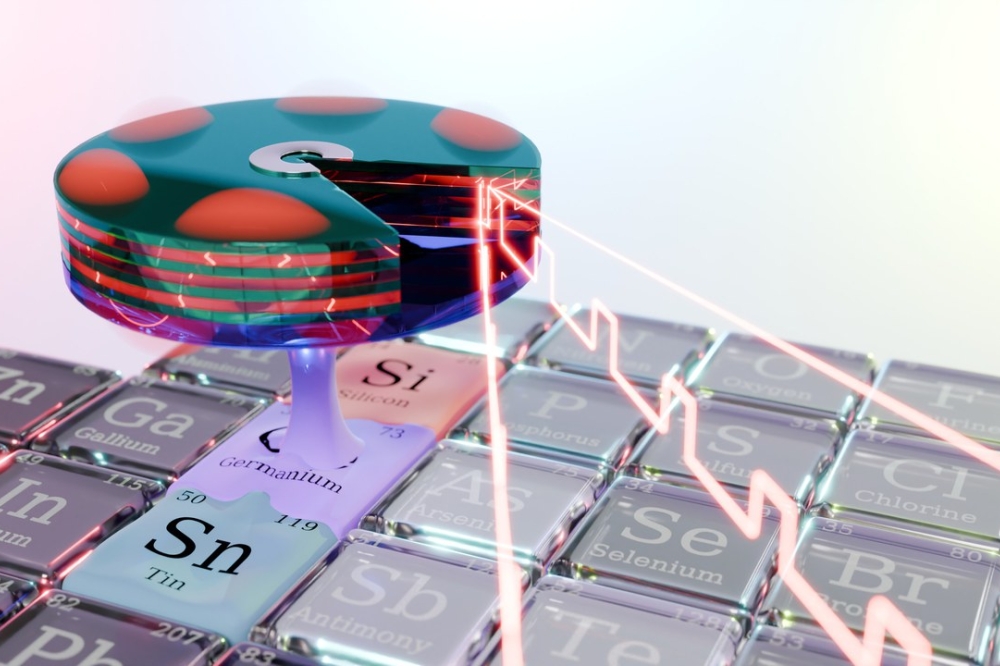

The Marvell custom AI accelerator architecture combines XPU compute silicon, HBM and other chiplets with Marvell 3D silicon photonics engines on the same substrate using high-speed SerDes, die-to-die interfaces and advanced packaging technologies. The company says this approach eliminates the need for electrical signals to leave the XPU package into copper cables or across a printed circuit board. With integrated optics, connections between XPUs can achieve faster data transfer rates and distances that are 100x longer than electrical cabling, enabling scale-up connectivity within AI servers that spans multiple racks with optimal latency and power dissipation, Marvell adds.

CPO technology integrates optical components directly within a single package, minimising the electrical path length. This close coupling significantly reduces signal loss, enhances high-speed signal integrity, and minimises latency. CPO enhances data throughput by leveraging high-bandwidth silicon photonics optical engines, which provide higher data transfer rates and are less susceptible to electromagnetic interference than traditional copper connections. This integration also improves power efficiency by reducing the need for high-power electrical drivers, repeaters and retimers. By enabling longer-reach and higher-density XPU-to-XPU connections, CPO technology facilitates the development of high-performance, high-capacity scale-up AI servers, optimising both compute performance and power consumption for next-generation accelerated infrastructure.

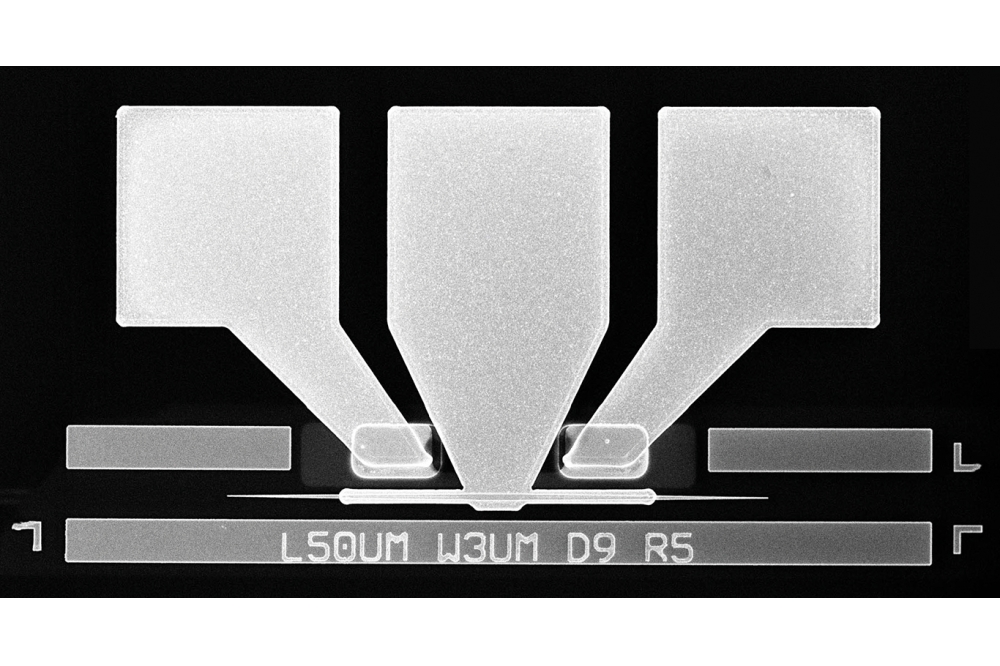

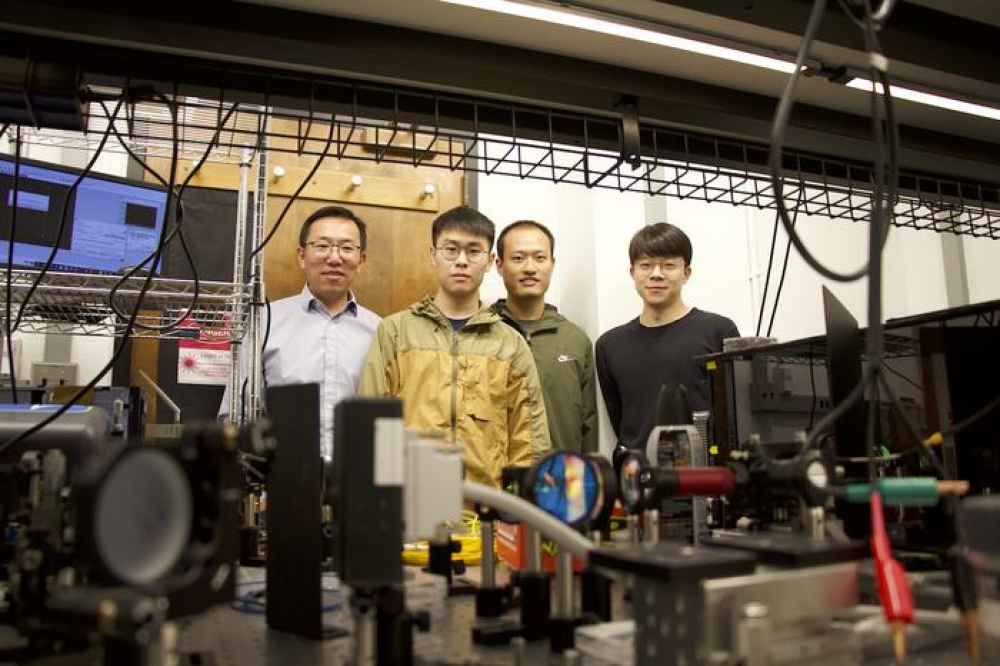

Marvell describes its 3D silicon photonics engine, first demonstrated at OFC 2024, as an industry first. The engine supports 200G electrical and optical interfaces and is a fundamental building block for incorporating CPO into XPUs. The company says its 6.4T 3D silicon photonics engine is a highly integrated optical engine with 32 channels of 200G electrical and optical interfaces. It combines hundreds of components, such as modulators, photodetectors, modulator drivers, trans-impedance amplifiers and microcontrollers, along with a host of other passive components, in a single, unified device. According to Marvell, the engine delivers 2x the bandwidth, 2x the input/output bandwidth density, and 30 percent lower power per bit versus comparable devices with 100G electrical and optical interfaces.
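As a rough check on the figures quoted above, the short Python sketch below works through the arithmetic behind the 6.4T and 2x numbers. It is illustrative only: the variable and function names are hypothetical, and it assumes that the "comparable" 100G device has the same 32-channel count, which the announcement does not state.

```python
# Back-of-the-envelope check of the quoted engine figures.
# Assumption (not from Marvell): the comparable 100G device also has 32 channels.

CHANNELS = 32              # channels per optical engine, as quoted
GBPS_PER_CHANNEL_200G = 200
GBPS_PER_CHANNEL_100G = 100
POWER_PER_BIT_SAVING = 0.30  # "30 percent lower power per bit", as quoted


def aggregate_tbps(channels: int, gbps_per_channel: int) -> float:
    """Aggregate bandwidth in Tb/s for a given channel count and per-channel rate."""
    return channels * gbps_per_channel / 1000


engine_200g = aggregate_tbps(CHANNELS, GBPS_PER_CHANNEL_200G)
baseline_100g = aggregate_tbps(CHANNELS, GBPS_PER_CHANNEL_100G)

# Ratio of aggregate bandwidth between the two devices.
bandwidth_ratio = engine_200g / baseline_100g

# Power per bit of the 200G engine relative to the 100G baseline (baseline = 1.0).
relative_power_per_bit = 1.0 - POWER_PER_BIT_SAVING

print(f"200G engine: {engine_200g} Tb/s")
print(f"100G baseline (assumed 32 channels): {baseline_100g} Tb/s")
print(f"Bandwidth ratio: {bandwidth_ratio}x")
print(f"Relative power per bit: {relative_power_per_bit:.2f}")
```

Under that assumption, 32 channels at 200G account for the 6.4T headline figure, and doubling the per-channel rate at a fixed channel count accounts for the 2x bandwidth claim.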

“The Marvell custom AI accelerator with CPO architecture enables cloud hyperscalers to develop custom XPUs that will significantly increase the density and performance of their AI servers,” said Will Chu, senior vice president and general manager of the Custom, Compute and Storage Group at Marvell. “Integrating optics directly into XPUs takes custom accelerated infrastructure to the next level of scale and optimisation that hyperscalers must deliver to satisfy the growing demands of AI applications.”