The evolving role of optics in AI clusters

In the AI arms race between tech giants, there is more than one approach to using optics for efficient datahandling in datacentres and AI clusters.

By Vladimir G. Kozlov, Chief Analyst at LightCounting

Artificial intelligence will transform a wide range of industries over the next decade, the manufacturing of optical components and interconnects being one of them. It is also one of the few industries directly impacted by the boom in construction of AI clusters, whose effects alone will be enough to reshape the sector. Although novel technologies and numerous startups will have an opportunity to succeed, it will be up to the largest customers to select the winners. Decisions made by Google, Microsoft, and Nvidia will define the directions for innovation, and there may be more than one path forward in this arms race.

Google and Nvidia already have diverging priorities for optical connectivity and switching, while other large customers may find new ways to use optics for AI. All-optical signal processing continues to improve, but it is hard to compete with billions of transistors in a single chip. The most advanced PICs are limited to a few thousand devices. Yet, there may be another way to perform analogue processing of the optics.

Video and image recognition are among the most exciting applications of AI, and probably the most consequential in their capacity to compete with human cognition and transform our world. All of this starts with the optics. Our own biological vision systems can zero in on what matters, rather than flooding our brains with information. Similarly, LiDAR technologies already enable video recognition to focus on moving objects instead of a static background. Are there more tricks for the optics to do? The answer is yes, but it is hard to predict exactly what these will be.

Considering what we already know, Figure 1 shows LightCounting’s forecast for shipments of optical transceivers and optical circuit switches (OCS) for the next five years. Both charts show explosive growth, which may be interrupted by a slowdown at some point, but will likely resume soon after that. Currently, optical transceivers for AI clusters are shipping in tens of millions of units annually, and will reach close to 100 million units by 2029. Shipments of OCS reached 10,000 units in 2023 and will exceed 50,000 by 2029.

Figure 1. LightCounting’s forecast for shipments of optical transceivers and optical switches for AI clusters over the next five years. (Source: LightCounting)

AI cluster architectures

Google started using OCS in their compute nodes and AI clusters more than a decade ago. The company reported on the advantages of OCS-enabled architecture in multiple recent publications. Several other large AI cluster operators are now also starting to use OCS, and many more are seriously considering the benefits of following suit.

There is little doubt that demand for OCS will be strong and there may be more complex applications of optical switching in the future. Packet switching is problematic, since there are no practical solutions for optical buffers, but elephant flows can be routed optically. One can “hear” such flows coming from a distance and pre-provide a path for them.

Google was also the first to use optical transceivers in its datacentres back in 2007. Although the company’s adoption of this technology was briefly interrupted by the financial crises in 2008-2009, it resumed in full force in 2010. Many other cloud companies have followed Google’s approach over the last decade. Nvidia (Mellanox) preferred active optical cables (AOCs) until two years ago, but it became the largest consumer of 400G/800G transceivers in 2023.

Nvidia is currently using optical transceivers for Ethernet and InfiniBand connectivity between racks of servers and switches. The company also announced plans to use optics for NVLink connections two years ago and demonstrated it in one of their internally built clusters. NVLink connections require 9 times more bandwidth than InfiniBand, so reducing the cost and power consumption of the optics is a must to enable this new application.

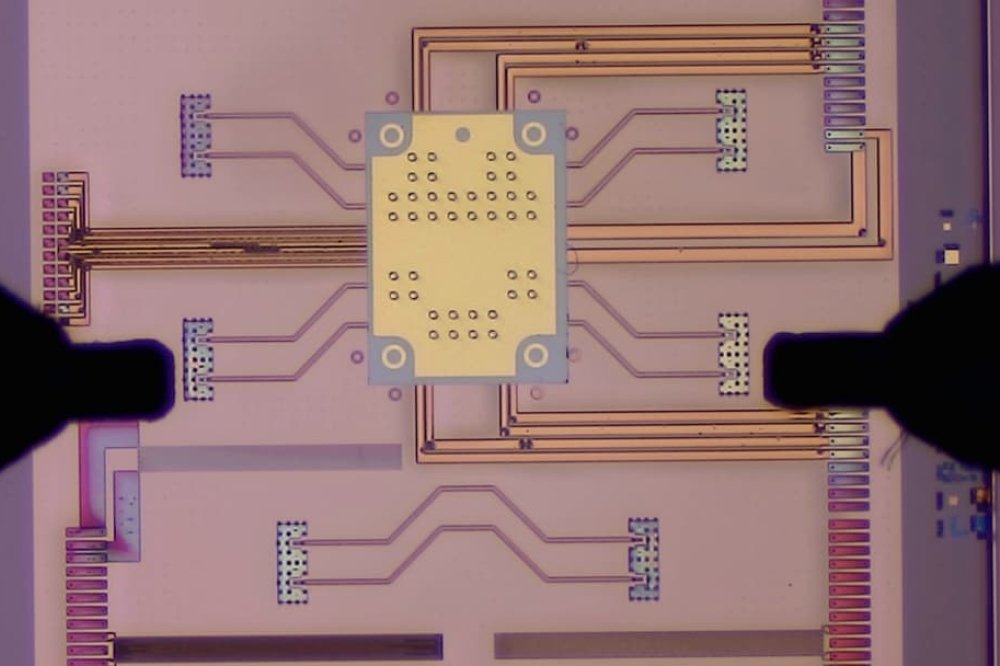

Figure 2.A comparison of the AI cluster architectures used by Google and Nvidia. (Source: Google)

Figure 2 compares the AI cluster architectures used by Google and Nvidia. TPU clusters developed by Google do not require Ethernet or InfiniBand switches, but use OCS. Each TPU can communicate directly with its six nearest neighbours, and OCS can scale and reconfigure these tightly knit networks. In contrast, Nvidia’s designs rely heavily on InfiniBand, Ethernet, and NVLink switches, requiring a lot more optical connectivity than Google’s designs.

These differences in AI cluster architectures lead to diverging priorities for optical interconnects at Google and Nvidia, as illustrated in Figure 3. Google’s use of OCS prioritises a higher link budget to offset the 1.5 dB optical loss of an OCS. Multi-wavelength FR4/FR8 transceivers increase the throughput of an OCS by a factor of 4 or 8, in comparison with DR4/DR8 modules.

On the other hand, Nvidia prioritises lower cost and power consumption to accommodate the larger quantities of transceivers required in its clusters, and is very supportive of linear-drive pluggable optics (LPO) and co-packaged optics (CPO) approaches. Google has no interest in LPO or CPO, since its designs will continue to use only 1.5 transceivers per TPU on average, whereas Nvidia may need up to 10 transceivers per GPU in the future to support NVLink over fibre.

For this reason, we expect that Nvidia will deploy LPO and/or CPO in the next 2-3 years to reduce power consumption from 10-15 pJ/bit to 4-7 pJ/bit, enabling NVLink over fibre, as illustrated in Figure 4. Google is already using optics for inter-core interconnects (ICI) between TPUs. Further improvements in the power efficiency of optical devices are needed to enable optical connections to off-package memory, also shown in Figure 4.

Figure 3. Key requirements for the optics at Google and Nvidia. (Source: LightCounting)

Challenges in scaling performance

The reliability of all components inside an AI cluster is becoming critical for scaling up these systems; a single failed GPU or network link can reduce the efficiency of the whole cluster by 40 percent, and mitigating failures (via software) could take up to 10 minutes. Such failures occur every 30-45 minutes on average. This problem will only get worse for larger clusters based on more complex GPUs and optics.

Figure 5 shows data on transceiver failure analysis for 200G FR4 and 400G FR4 modules. Directly modulated laser degradation was the main source of failures in 200G modules. Degradation in the performance of an externally modulated laser used in 400G transceivers was less of an issue than general manufacturing issues related to printed circuit board assembly and wire bonds. Adopting more integrated wafer-scale design and manufacturing is essential for improving the reliability of the optics.

Figure 4. Applications of copper and optical interconnects. (Source: LightCounting)

GPU performance is expected to improve substantially by the end of this decade, through a combination of advances in CMOS, substrate and packaging methods, chip architecture, and better cooling techniques. Managing heat dissipation from these very large chip assemblies is one of many problems, so a roadmap for improvements in power efficiency is key for all technologies used in AI clusters. CMOS does have a roadmap from 5 nm to 3 nm and 2 nm within the next five years, but optical interconnects are still searching for a path to higher power efficiency.

Figure 5. Transceiver failure analysis for 200G FR4 and 400G FR4 modules. (Source: Meta)

LightCounting expects that linear-drive optics will be deployed in volume over the next five years, either as pluggable transceivers (LPO or linear receive optics) or as CPO. The industry will need new materials and devices to achieve further gains in power efficiency. Time to market may be as long as a decade for some of the novel technologies, but a few of these will be adopted within the next five years. It is worth bearing in mind that this is an arms race; there will be customers who are willing to take bigger risks.