Optical neural network demonstrated on chip

A team of scientists has reported an adaptive neural network comprising thousands of artificial photonic neurons on a single chip. The researchers say this could pave the way for more efficient computing for AI applications in future.

Modern computational models, such as powerful AI models, push traditional digital computing to its limits. New types of computing architecture, which emulate the working principles of biological neural networks, hold the promise of faster, more energy-efficient data processing.

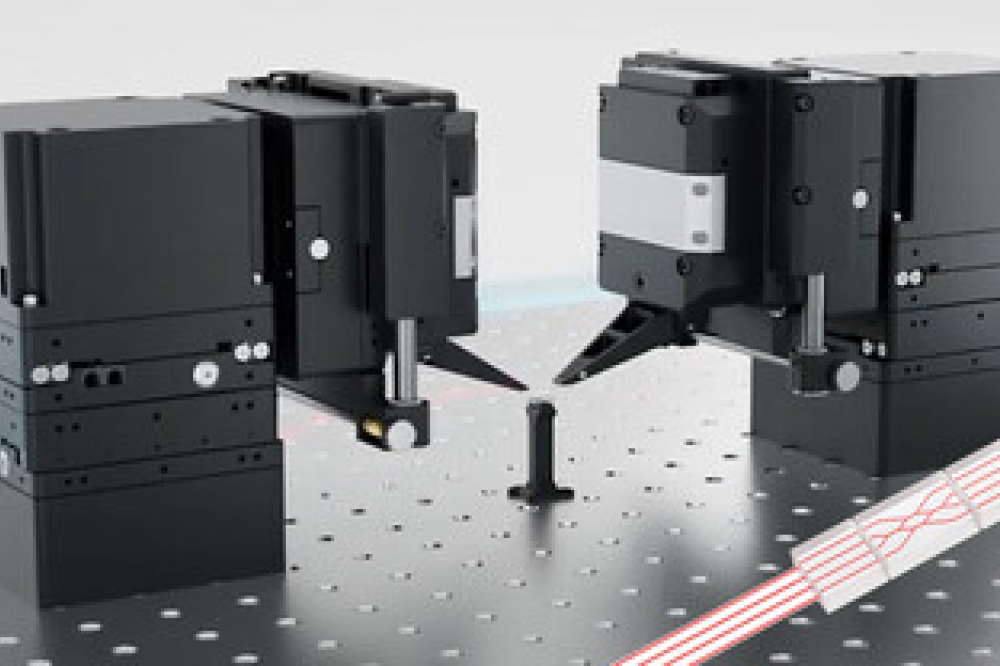

Pursuing this possibility, the researchers developed a so-called event-based architecture, using photonic processors with which data is transported and processed by means of light. In a similar way to the brain, this enables the continuous adaptation of the connections within the neural network. These changeable connections are the basis for learning processes.

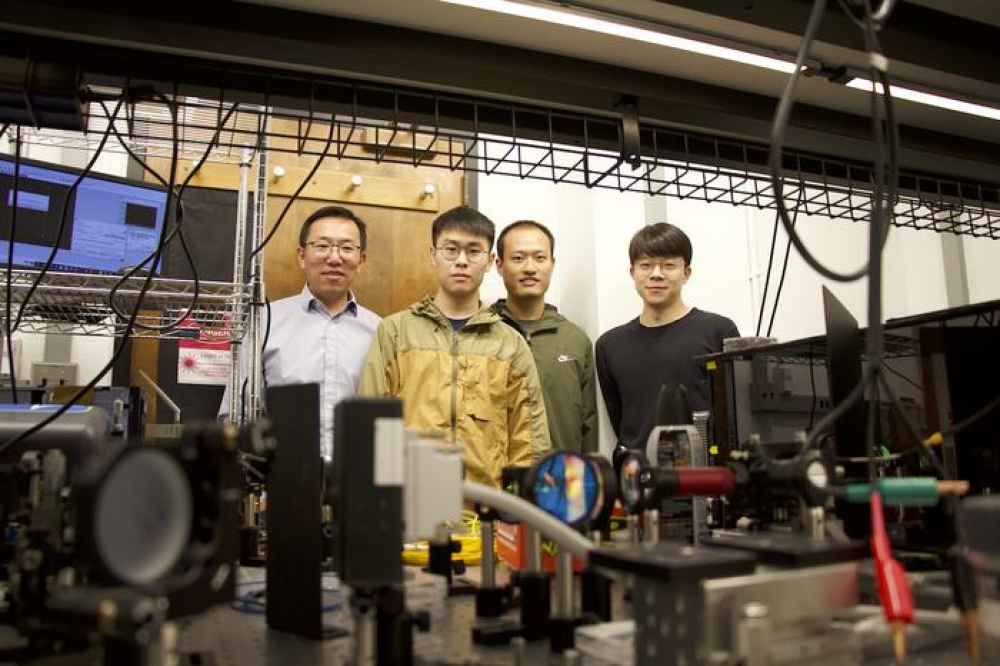

For the purposes of the study, a team working at Collaborative Research Centre 1459 (“Intelligent Matter”) – headed by physicists Prof. Wolfram Pernice and Prof. Martin Salinga and computer specialist Prof. Benjamin Risse, all from the University of Münster – joined forces with researchers from the University of Exeter and the University of Oxford. The study has been published in the journal “Science Advances”.

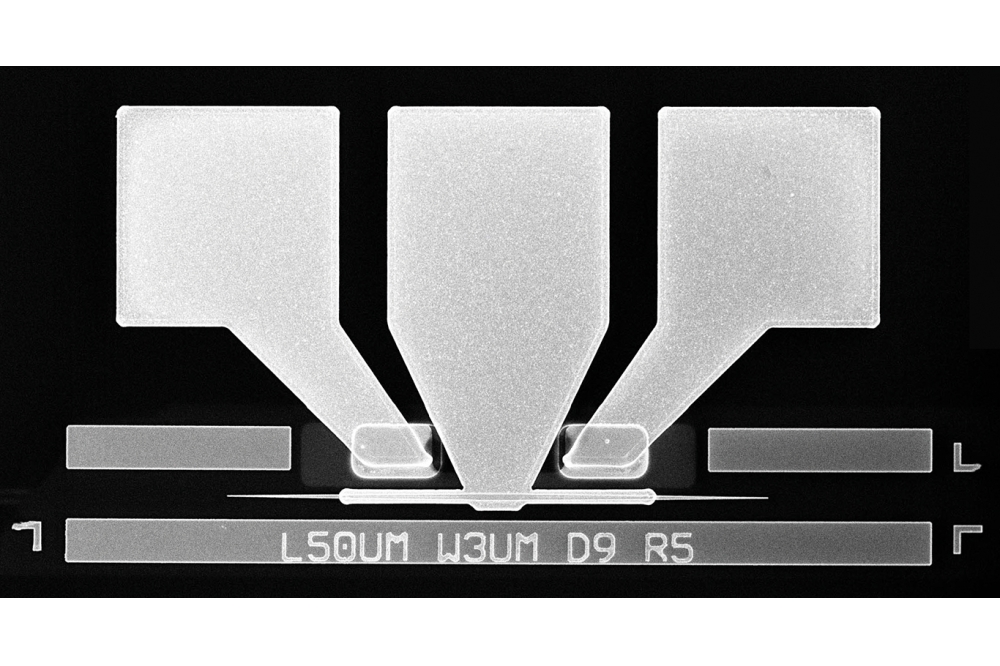

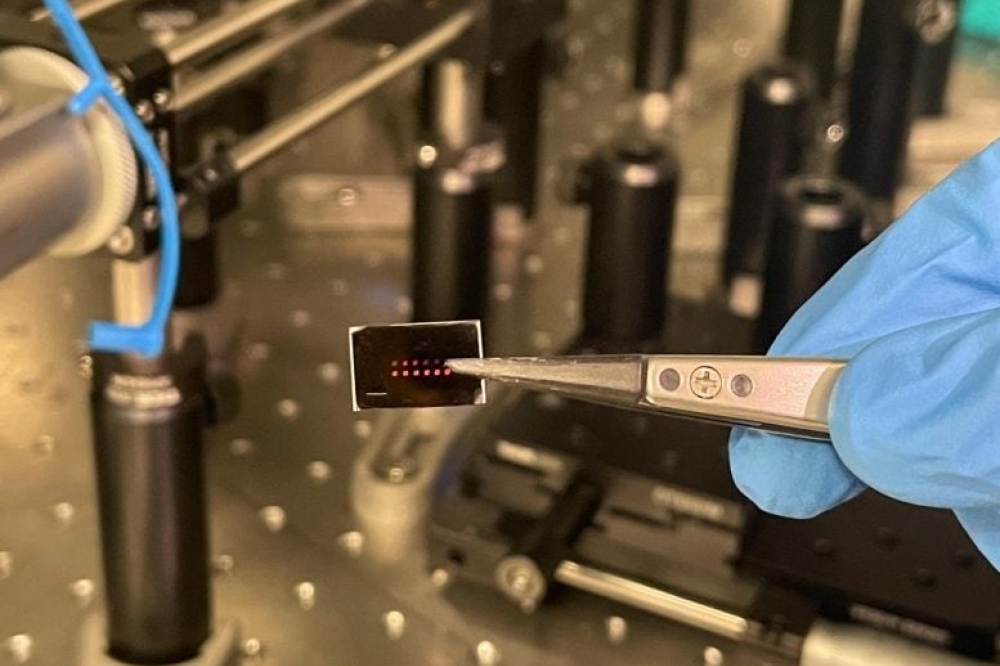

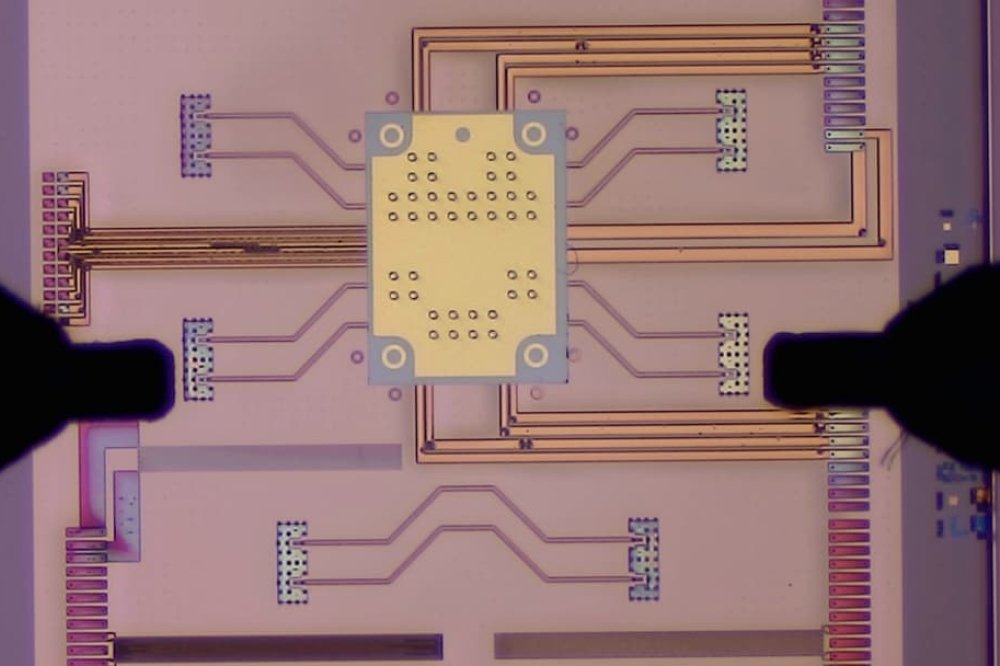

A neural network in machine learning requires artificial neurons that are activated by external excitatory signals and that are connected to other neurons. The connections between these artificial neurons are called synapses, in reference to their biological inspiration. For their study, the researchers in Münster used a network consisting of almost 8,400 optical neurons made of waveguide-coupled phase-change material, which can be switched between an amorphous structure and a crystalline structure with a highly ordered atomic lattice.

The team showed that the connection between each pair of these neurons can indeed become stronger or weaker (synaptic plasticity), and that new connections can be formed, or existing ones eliminated (structural plasticity). In contrast to other similar studies, the synapses were not hardware elements but were coded by the properties of the optical pulses – in other words, as a result of the respective wavelength and intensity of the optical pulse. This made it possible to integrate several thousand neurons on one single chip and connect them optically.
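To make the two kinds of plasticity concrete, here is a minimal toy sketch in Python. It is not the photonic implementation from the study: the class `ToyNetwork`, its methods and the pruning threshold are hypothetical names and values chosen for illustration. Each connection is simply a number between 0 and 1, loosely standing in for the strength that the optical pulses encode.

```python
from dataclasses import dataclass, field


@dataclass
class ToyNetwork:
    """Illustrative model only; all names and values here are assumptions."""

    # Maps (source, target) neuron indices to a connection strength in [0, 1].
    weights: dict = field(default_factory=dict)
    # Connections weaker than this are removed by prune() (assumed value).
    prune_below: float = 0.05

    def adjust(self, src: int, dst: int, delta: float) -> None:
        """Synaptic plasticity: strengthen (delta > 0) or weaken (delta < 0)."""
        if (src, dst) not in self.weights:
            raise KeyError(f"no connection from {src} to {dst}")
        new_strength = self.weights[(src, dst)] + delta
        # Keep the strength inside the allowed range.
        self.weights[(src, dst)] = min(1.0, max(0.0, new_strength))

    def connect(self, src: int, dst: int, strength: float = 0.1) -> None:
        """Structural plasticity: form a new connection if none exists yet."""
        self.weights.setdefault((src, dst), strength)

    def prune(self) -> list:
        """Structural plasticity: eliminate connections that became too weak."""
        removed = [k for k, w in self.weights.items() if w < self.prune_below]
        for key in removed:
            del self.weights[key]
        return removed


net = ToyNetwork()
net.connect(0, 1, strength=0.5)   # a new connection is formed
net.adjust(0, 1, +0.2)            # the connection becomes stronger
net.adjust(0, 1, -0.68)           # ... and then much weaker
removed = net.prune()             # weak connections are eliminated
```

Because the connections in this sketch live in an ordinary dictionary rather than in dedicated components, adding or removing one costs nothing structurally, which loosely mirrors the article's point that the synapses were not hardware elements.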

In comparison with traditional electronic processors, light-based processors offer a significantly higher bandwidth, making it possible to carry out complex computing tasks with lower energy consumption. “Our aim is to develop an optical computing architecture which in the long term will make it possible to compute AI applications in a rapid and energy-efficient way,” says Frank Brückerhoff-Plückelmann, one of the lead authors of the study.