Distributed quantum computing with an Entanglement First architecture

Photonic Inc. is harnessing silicon photonics to build a high-connectivity, modular system that solves the scalability challenge in quantum computing and paves the way for its use in commercially relevant applications.

By Kevin Morse, Senior Technical Product Manager, Photonic

To realise the full potential of quantum computing in commercially relevant applications, including materials science, drug discovery, climate change, and security, we will need to build large-scale quantum computers. However, there is a well-known obstacle in scaling quantum technology: the difficulty of connecting individual systems to get beyond a single unit and perform distributed quantum computing across modules.

This feat demands the efficient creation and distribution of high-quality entanglement – a property that links two qubits (quantum bits) such that their states remain correlated regardless of the distance between them, so a measurement on one immediately tells us about the other. At a high level, we can think of entanglement as a critical resource for information transfer and operations in quantum computers. Any high-performance quantum computing system must therefore be able to efficiently generate, distribute, and consume entanglement.

There are two broad categories of techniques that quantum computers use to generate entanglement: direct coupling (proximity-based) and mediated interaction (for example via photons). In the former, the qubits are physically close enough to connect to one another. In photon-based mediated interaction approaches, photons interfere with one another and entangle the qubits, while the qubits themselves remain physically separated.

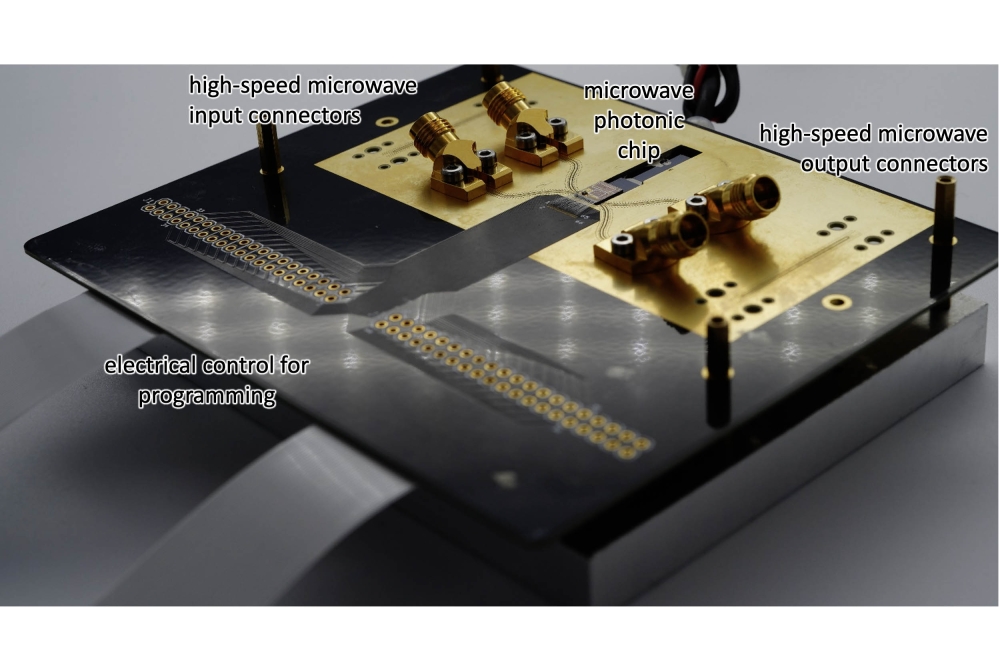

Figure 1. Entanglement First Architecture Overview: The architecture consists of silicon T centres embedded in optical cavities, photonic switches, and single-photon detectors, all cooled in a 1 K cryostat. These components are connected via telecom fibre to room-temperature photonic switch networks and control electronics. The setup allows for non-local connectivity, enabling both the expansion of computing power and the creation of long-distance quantum networks. The system is designed for integration into standard datacentre setups.

At Photonic Inc., we have developed a photon interference-based Entanglement First architecture, so named because it prioritises this key resource. Rather than using photons as the qubits, as some other companies do, we instead use colour centres in silicon as the qubit platform, while photons serve as the interconnects between them.

In Photonic’s technology, a qubit emits telecom-wavelength photons that are spin-entangled, meaning that their quantum state is directly linked to a property of the qubit called its spin state. This entanglement is crucial for performing quantum operations both within and across modules, and for distributing entanglement across the network, a task that becomes increasingly complex as systems scale.

Telecom photons can enable high-connectivity links between systems, but they only do so efficiently if the system has been designed to interface with optical fibre without the need for signal frequency conversion between electrical and optical ranges (transduction). This is what Photonic’s Entanglement First architecture is built to do, paving the way for large-scale quantum computing and networking [1].

From the outset, we designed this approach to scale up, with continual increases in the quantity and density of components within a single unit, and to scale out, by adding units to a network.

Drawing inspiration from classical cloud computing, where networking has enabled massive compute power, Photonic focuses on integrating intermodular operations, a unique challenge for quantum technologies.

To do this, we combine three powerful technologies.

First, we use a high-performing qubit platform carefully chosen to support scalability. Our low-cost, high-density silicon spin qubits have an optical interface to facilitate communication through photons. They also have long coherence times, meaning they can maintain their quantum states for a long time, an essential capability for commercial applications.

Second, we harness a switched optical architecture that incorporates silicon photonics and telecom fibre to enable on-demand entanglement both within and between modules. This feature makes for a high-connectivity system to facilitate distributed computing.

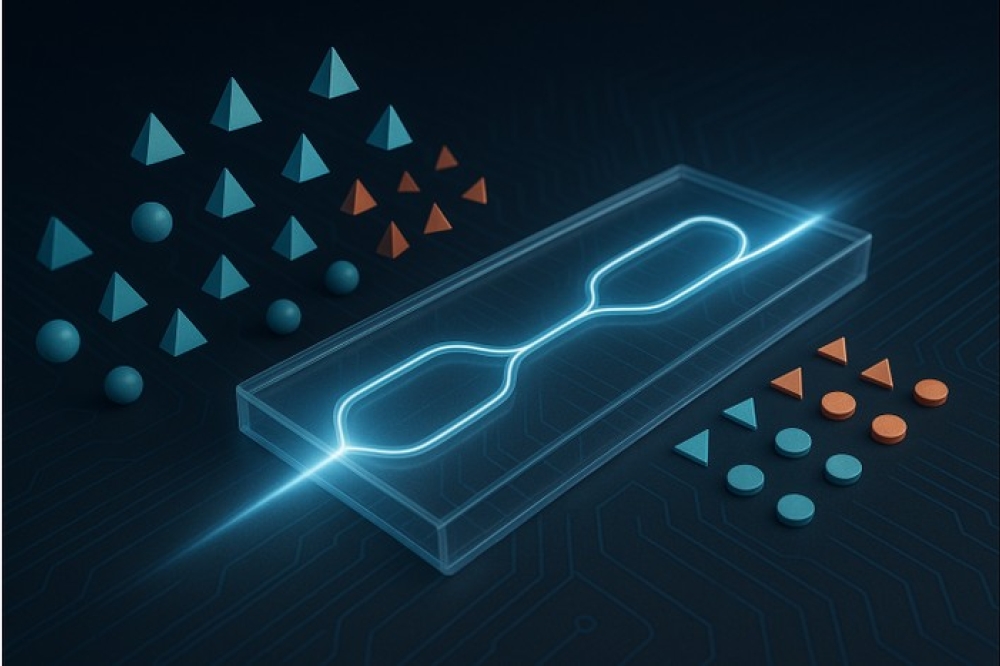

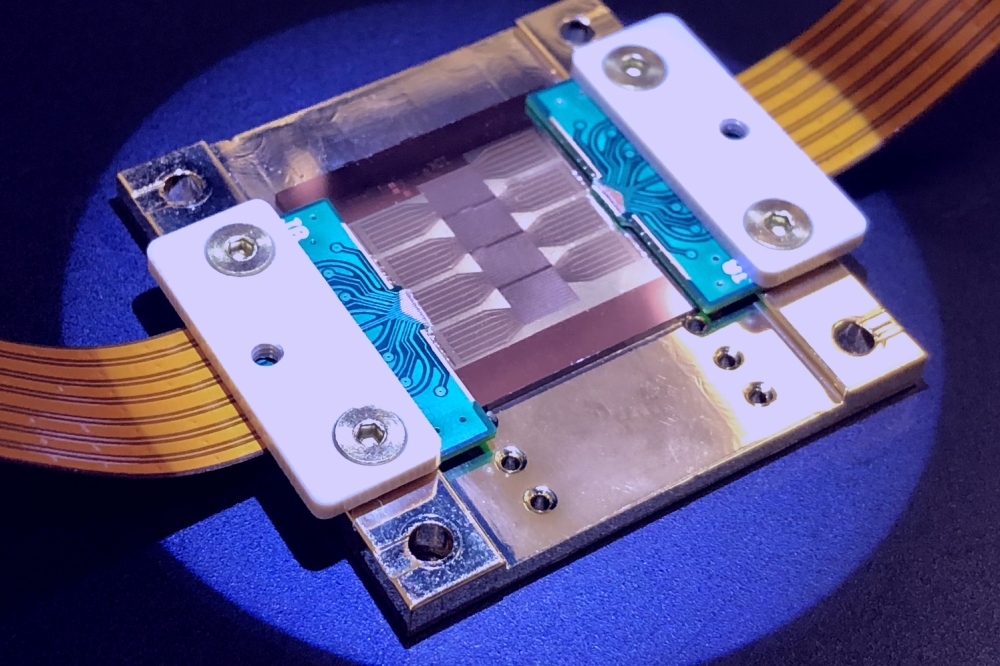

Figure 2. Photonic’s qubit platform, the T centre in silicon

Third, this high connectivity supports quantum low-density parity check (QLDPC) codes, a technique for correcting computational errors. Compared with conventional methods, these codes are fast and efficient at addressing errors that occur in quantum computing operations, with a known instance requiring up to 20 times fewer qubits for this task.

This combination makes it possible to distribute and consume entanglement efficiently using any-to-any connectivity with telecom photons between qubits. This core capability puts Photonic’s quantum system in prime position to reach commercial utility and datacentre deployment.

High-performance qubit platform

The basis of our high-performance qubit platform is the T centre in silicon, which occurs when a silicon atom is replaced with two carbon atoms, one hydrogen atom, and an unpaired electron. These particles act as spin qubits – encoding information in their spin states and effectively serving as the compute and memory of the quantum processor.

A relatively new qubit modality, the T centre is Photonic’s platform of choice due to its favourable properties for generating and distributing entanglement. First, while quantum states are inherently fragile, T centres have long coherence times, meaning they can maintain their quantum state over extended periods. This is crucial for reliable quantum operations. Second, T centres emit telecom-wavelength photons, which are compatible with existing fibre-optic communication infrastructure, facilitating long-distance quantum communication. Finally, T centres are naturally compatible with silicon photonic integrated circuits (PICs), allowing for scalable fabrication using existing manufacturing techniques.

The telecom photons that are the primary carriers of quantum information are also foundational to Photonic’s architecture. Emitted by the T centres, these photons establish entanglement between distant qubits connected by telecom fibres. The photons’ inherent telecom wavelength is particularly advantageous because it does not require conversion into another form to travel. It also minimises loss in optical fibres, allowing for long-distance communication and any-to-any connectivity.
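To give a feel for why low fibre loss matters, the short sketch below converts an attenuation figure in dB/km into the fraction of photons that survive a fibre link. The two attenuation values are illustrative assumptions, not figures from Photonic: roughly 0.35 dB/km is a typical order of magnitude for standard single-mode fibre in the telecom O-band, and 8 dB/km stands in for a shorter, non-telecom wavelength.

```python
def fibre_transmission(length_km: float, attenuation_db_per_km: float) -> float:
    """Fraction of photons that survive a fibre of the given length."""
    loss_db = attenuation_db_per_km * length_km
    return 10 ** (-loss_db / 10)


# Assumed, illustrative attenuation values (not taken from the article).
ASSUMED_TELECOM_DB_PER_KM = 0.35      # typical order of magnitude, O-band fibre
ASSUMED_NON_TELECOM_DB_PER_KM = 8.0   # placeholder for a visible-wavelength emitter

if __name__ == "__main__":
    for km in (0.1, 1.0, 10.0):
        telecom = fibre_transmission(km, ASSUMED_TELECOM_DB_PER_KM)
        other = fibre_transmission(km, ASSUMED_NON_TELECOM_DB_PER_KM)
        print(f"{km:5.1f} km: telecom {telecom:.3f}, non-telecom {other:.2e}")
```

Because loss compounds exponentially with distance, a difference of a few dB/km separates a usable link from one that delivers almost no photons over datacentre-to-metro distances.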

Photonic’s system creates entanglement through methods such as the Barrett-Kok protocol [2]. This involves using an optical pulse to trigger two physically distant T centres to emit synchronised photons, each one spin-entangled with the T centre that emitted it. The two photons are then interfered on a beam splitter and directed to single-photon detectors.

If the photons are indistinguishable – meaning they are so similar it is not possible to determine which photon originated from which T centre – the entanglement will be detected when they exit the same branch of the beam splitter at the same time. The two entangled qubits are now what is called a Bell pair. This means that they have a shared state and can no longer be considered independent of each other.
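The statement that a Bell pair "can no longer be considered independent" can be checked numerically. The sketch below is a generic textbook calculation rather than anything specific to Photonic's hardware: it computes the concurrence of a pure two-qubit state, which is 0 for two independent qubits and 1 for a maximally entangled pair. The particular Bell state used is chosen only for illustration.

```python
import math


def concurrence(amplitudes):
    """Concurrence of a pure two-qubit state given as [a00, a01, a10, a11].

    Returns 0 for a product (independent) state and 1 for a Bell pair.
    """
    a00, a01, a10, a11 = amplitudes
    norm = sum(abs(a) ** 2 for a in amplitudes)  # tolerate unnormalised input
    return 2 * abs(a00 * a11 - a01 * a10) / norm


s = 1 / math.sqrt(2)
bell_pair = [0, s, s, 0]       # (|01> + |10>) / sqrt(2), illustrative choice
product_state = [s, s, 0, 0]   # |0> (|0> + |1>) / sqrt(2): two independent qubits

if __name__ == "__main__":
    print("Bell pair:    ", round(concurrence(bell_pair), 6))
    print("Product state:", round(concurrence(product_state), 6))
```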

Such state sharing is required before quantum information can be transferred between qubits using teleportation protocols. Last year, Photonic was the first to demonstrate distributed computing using entangled qubits in separate commercial systems using this approach [3].

As well as computation and communication of data, the computing system needs quantum memory. This is also fulfilled by the T centre spin qubits, which can hold a quantum state in memory and continue to emit photons until entanglement is successful. This protects the system’s operation from errors if a photon is lost.
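This repeat-until-success behaviour can be sketched as a simple probabilistic model. The code below assumes each entanglement attempt succeeds independently with a fixed probability; the value 0.05 is a hypothetical placeholder, not a measured Photonic figure, and the function names are invented for illustration.

```python
import random


def expected_attempts(p_success: float) -> float:
    """Mean number of attempts before the first heralded success."""
    return 1.0 / p_success


def prob_success_within(p_success: float, max_attempts: int) -> float:
    """Probability of at least one success in max_attempts tries."""
    return 1.0 - (1.0 - p_success) ** max_attempts


def attempts_until_heralded(p_success: float, rng: random.Random) -> int:
    """Simulate retrying until a detector heralds success.

    The qubit is assumed to keep its state in memory between attempts,
    so a lost photon costs only time.
    """
    attempts = 1
    while rng.random() >= p_success:
        attempts += 1
    return attempts


if __name__ == "__main__":
    p = 0.05  # hypothetical per-attempt success probability
    rng = random.Random(1)
    trials = [attempts_until_heralded(p, rng) for _ in range(20_000)]
    print("expected attempts:", expected_attempts(p))
    print("simulated mean:   ", sum(trials) / len(trials))
    print("P(success within 100 attempts):", prob_success_within(p, 100))
```

In this model a lost photon never corrupts the computation; it only increases the number of attempts, which is why long-lived memory qubits pair naturally with heralded entanglement.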

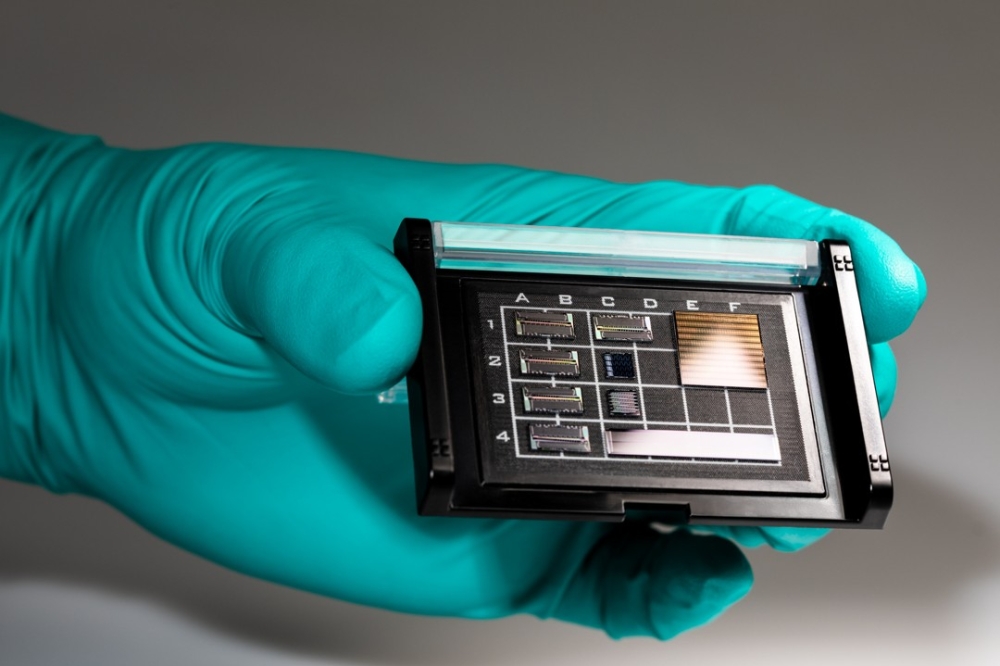

Figure 3. Photonic uses an interference-based approach to generate entanglement.

High-connectivity architecture

The T centre is rare among qubit platforms because of its direct telecom interface; it can interact efficiently with a pump laser pulse and emit a spin-entangled O-band photon. In our architecture, the T centre is embedded in a photonic cavity, which both isolates it from noise and enhances its emission rate of photons in the desired optical modes. The cavities are coupled to optical waveguides with well-defined modes so that spin-entangled photons can be routed accurately and with low loss to their destination, either through integrated photonic waveguides or by coupling into optical fibre. Optical fibres can then connect T centres on a single chip or across multiple chips, enabling an inherently modular and horizontally scalable design.

The use of integrated photonics is one of the most innovative aspects of this architecture, reducing losses and improving the overall performance of the quantum system. The optical cavities and photonic waveguides containing the T centres are fabricated on a silicon-on-insulator (SOI) platform, maximising photon collection efficiency and boosting the photon emission rate and quality through photonic engineering. Additionally, the SOI platform allows for high-density integration of qubits and photonic components, facilitating the creation of large-scale quantum systems.

Among the essential integrated components are on-chip photonic switches, which enable the routing of photons between different T centres and other photonic components. They operate by dynamically changing the path of the photons based on control signals from room-temperature electronics.

Integrated switches reduce the overall loss and latency in the system by processing photons within the chip, minimising the number of interfaces and potential points of loss they encounter. The switches are designed to handle the high bandwidth and low loss requirements of telecom photons, ensuring that the entanglement distribution is efficient and reliable.

Single-photon detectors are another key integrated component, collecting individual photons and measuring their quantum state on-chip. Upon detecting a photon, these detectors generate a signal to confirm its transmission and reception, heralding successful entanglement events.

Specifically, Photonic’s architecture employs superconducting nanowire single-photon detectors (SNSPDs), which are known for their high efficiency and low dark count rates; they catch a very high percentage of photons but rarely generate a false signal when a photon is not present. These detectors can detect single photons with high fidelity, ensuring an accurate and reliable entanglement distribution process.

The on-chip integration of SNSPDs further enhances the system’s performance by reducing the overall loss and improving detection efficiency.

Besides the integrated photonics, the architecture also employs off-chip, room-temperature photonic switch networks and control electronics. These are connected to the cryogenic quantum modules via telecom fibre. This setup allows for non-local connectivity, enabling both the expansion of computing power and the creation of long-distance quantum networks. Room-temperature components also increase the efficiency by reducing the energy and cooling requirements of the system.

A major advantage of Photonic’s system is its modularity, made possible through high connectivity. In the Entanglement First architecture, each T centre is connected by an optical fibre to a switch, allowing for flexible and arbitrary routing. The system works the same way whether it is connecting qubits within a module or across modules. Instead of requiring ever-larger monolithic quantum supercomputers to increase the size of a system, this architecture can directly link multiple quantum modules across telecom fibres.
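A toy count makes the connectivity argument concrete. The sketch below compares the qubit pairs that can be entangled directly under any-to-any switching with those available in a nearest-neighbour chain; the chain is an assumed baseline chosen for contrast, and the module labels and sizes are hypothetical.

```python
from itertools import combinations


def any_to_any_pairs(qubits):
    """Every pair of qubits can be routed to a shared interference point."""
    return list(combinations(qubits, 2))


def nearest_neighbour_pairs(qubits):
    """Assumed baseline: each qubit couples only to its neighbour in a chain."""
    return list(zip(qubits, qubits[1:]))


def cross_module(pairs):
    """Pairs whose two qubits sit in different modules."""
    return [(a, b) for a, b in pairs if a[0] != b[0]]


# Hypothetical layout: two modules, three qubits each, labelled (module, index).
qubits = [(module, i) for module in ("A", "B") for i in range(3)]

if __name__ == "__main__":
    switched = any_to_any_pairs(qubits)
    chain = nearest_neighbour_pairs(qubits)
    print("any-to-any pairs:", len(switched), "cross-module:", len(cross_module(switched)))
    print("chain pairs:     ", len(chain), "cross-module:", len(cross_module(chain)))
```

Note that the switched model makes no distinction between pairs inside a module and pairs across modules; both are entries in the same list.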

Approaches that do not generate entanglement through telecom-wavelength photon interference need additional steps: converting to a telecom wavelength to travel between modules, then converting back to perform operations. Photonic’s approach to modularity not only simplifies scaling for quantum computers but also applies to scalable quantum networks.

Scaling fault-tolerant systems

There is still another factor to consider for building practical, large-scale quantum computers: fault tolerance. The quantum effects that underpin quantum technologies occur at a tiny, often subatomic scale, making them particularly susceptible to noise from their environment. Disruptions can lead to the loss of entangled or quantum states and to operational imperfections, resulting in computational errors.

Quantum computing systems usually handle this problem by forming logical qubits – groups of individual physical qubits that work together using error-correcting codes to detect and fix errors during computation. While individual qubits are notoriously unstable, groups are much more resilient to noise, enabling the system to complete operations and run algorithms reliably.

Error-correction codes are therefore essential for achieving a fault-tolerant system, but there is a wide range of different codes, some of which require more physical qubits per logical qubit than others. A high-connectivity architecture offers a further benefit here: it allows more flexibility in the codes that can be used. Certain types of codes, called quantum low-density parity check (QLDPC) codes, require comparatively few physical qubits per logical qubit.

This means that a smaller system using QLDPC can achieve the same computational capacity as a larger system running a less efficient type of error-correction code. In February, Photonic introduced a new QLDPC code family, SHYPS [4], which is the first to unlock logic in this type of code [5]. This family requires up to 20 times fewer physical qubits per logical qubit than conventional techniques.
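The effect on system size is simple arithmetic, sketched below. The baseline of 1,000 physical qubits per logical qubit and the target of 100 logical qubits are hypothetical placeholders; only the factor of 20 comes from the text, and since it is quoted as "up to" it represents a best case.

```python
import math


def physical_qubits(logical_qubits: int, physical_per_logical: float) -> int:
    """Total physical qubits needed for a given logical qubit count."""
    return math.ceil(logical_qubits * physical_per_logical)


ASSUMED_BASELINE_PER_LOGICAL = 1000  # hypothetical overhead of a conventional code
BEST_CASE_REDUCTION = 20             # "up to 20 times fewer", per the article
TARGET_LOGICAL_QUBITS = 100          # hypothetical machine size

if __name__ == "__main__":
    baseline = physical_qubits(TARGET_LOGICAL_QUBITS, ASSUMED_BASELINE_PER_LOGICAL)
    qldpc = physical_qubits(
        TARGET_LOGICAL_QUBITS, ASSUMED_BASELINE_PER_LOGICAL / BEST_CASE_REDUCTION
    )
    print("conventional code:", baseline, "physical qubits")
    print("QLDPC, best case: ", qldpc, "physical qubits")
```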

However, QLDPC codes require a high-connectivity architecture; they are not universally implementable but are well suited to our Entanglement First system.

By combining the high-performance physical architecture with these exceptionally efficient error-correction codes, Photonic is realising the true potential of large-scale, distributed, fault-tolerant systems for commercially relevant quantum computing applications. The Entanglement First system is designed to scale up and scale out by optimising distributed computation with high connectivity and integrating key components on-chip.

The benefits of this approach are not limited to quantum computers.

The key characteristics of the architecture also meet the requirements for quantum repeaters for long-distance communication of quantum information and scalable quantum networks.

Photonic is defining the shortest path to accessible quantum services based on scalable quantum systems, accelerating the timelines to achieving the benefits of quantum technology.