An optical neural network for computer vision

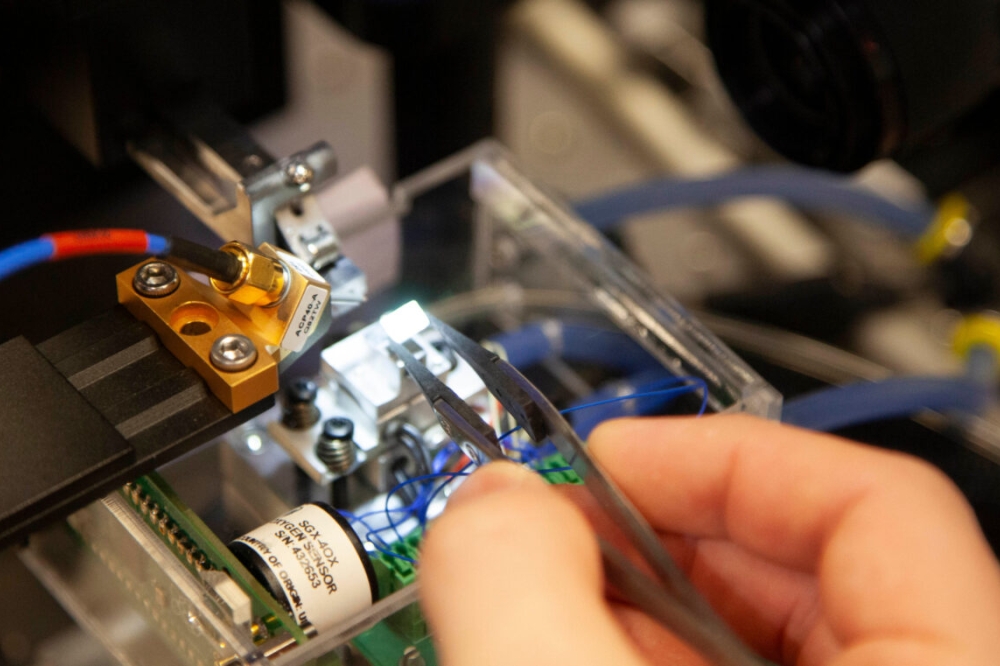

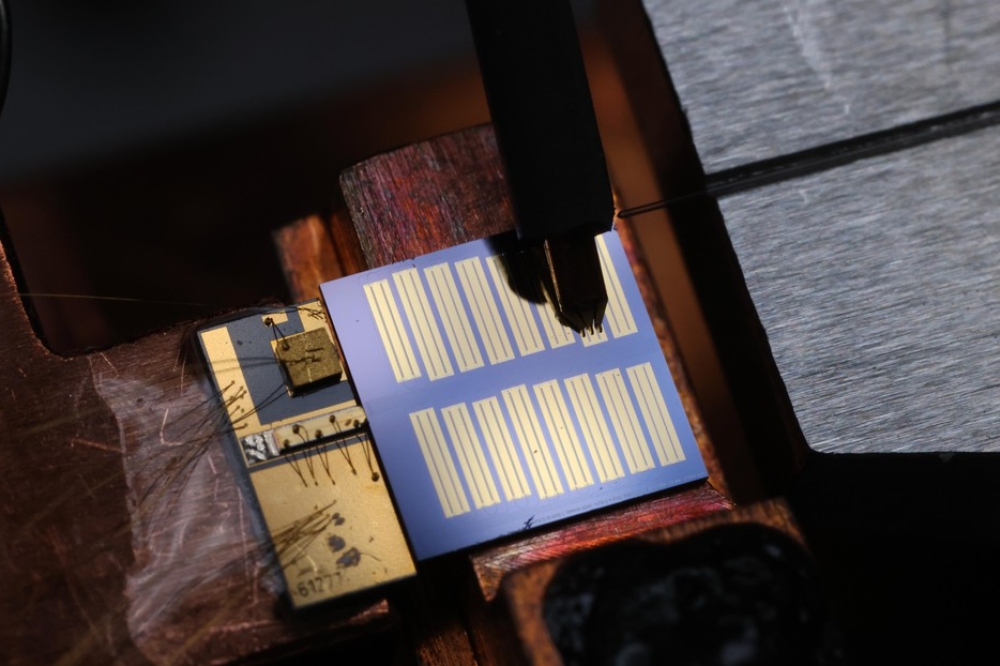

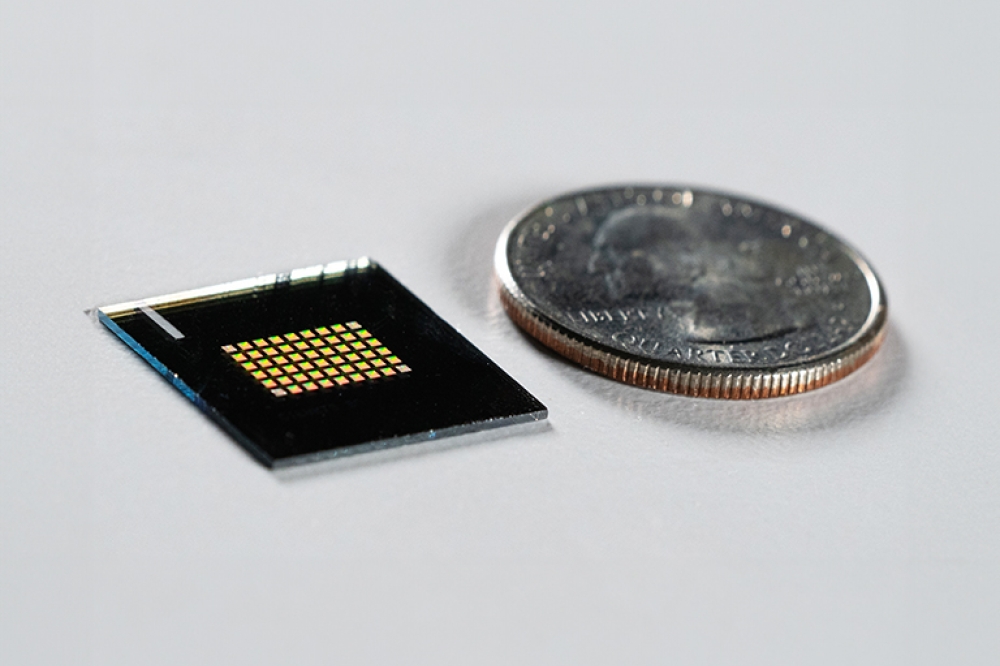

By combining a nanophotonic neural network with miniaturised camera optics, researchers have developed a device they say can capture clear images and identify objects at the speed of light, representing a new approach to the field of computer vision. Photo by Ilya Chugunov, courtesy of Princeton University

Researchers have reported developing a new kind of compact camera engineered for computer vision – a type of AI that allows computers to recognise objects in images and video. Describing their work in a paper published in Science Advances, the team say that their research prototype uses optics for computing, significantly reducing power consumption and enabling the camera to identify objects at the speed of light.

The research was led by Arka Majumdar, a professor in electrical and computer engineering and physics at the University of Washington, and Felix Heide, an assistant professor of computer science at Princeton University, who have a longstanding collaboration. They and their students previously developed a camera shrunk down to the size of a grain of salt that is still capable of capturing clear images. The new study builds on that work, finding an application for compact cameras in computer vision.

“This is a completely new way of thinking about optics, which is very different from traditional optics,” said Majumdar. “It’s end-to-end design, where the optics are designed in conjunction with the computational block. Here, we replaced the camera lens with engineered optics, which allows us to put a lot of the computation into the optics.”

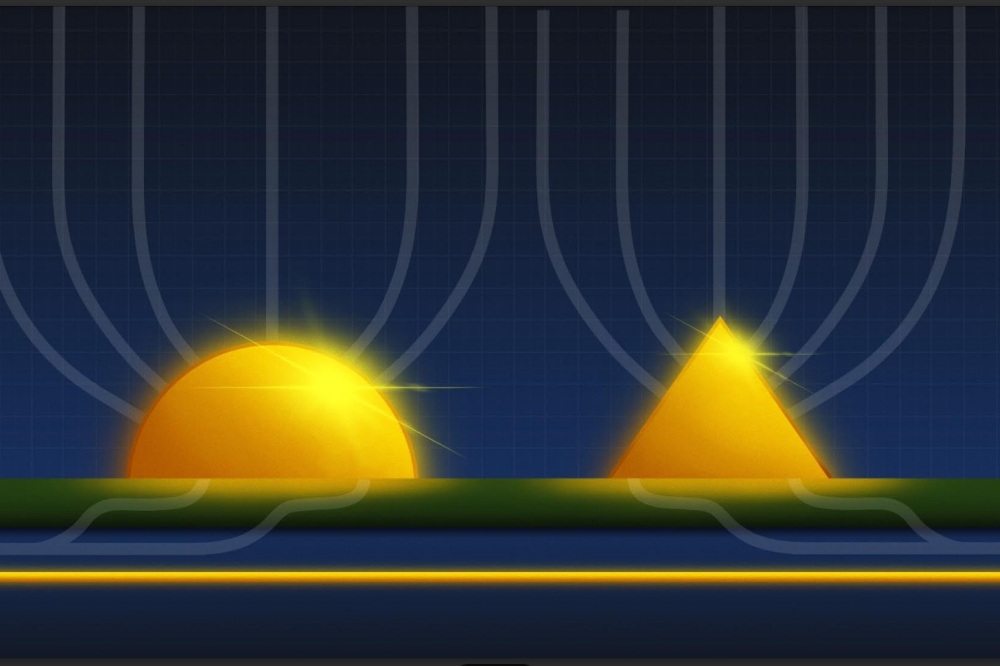

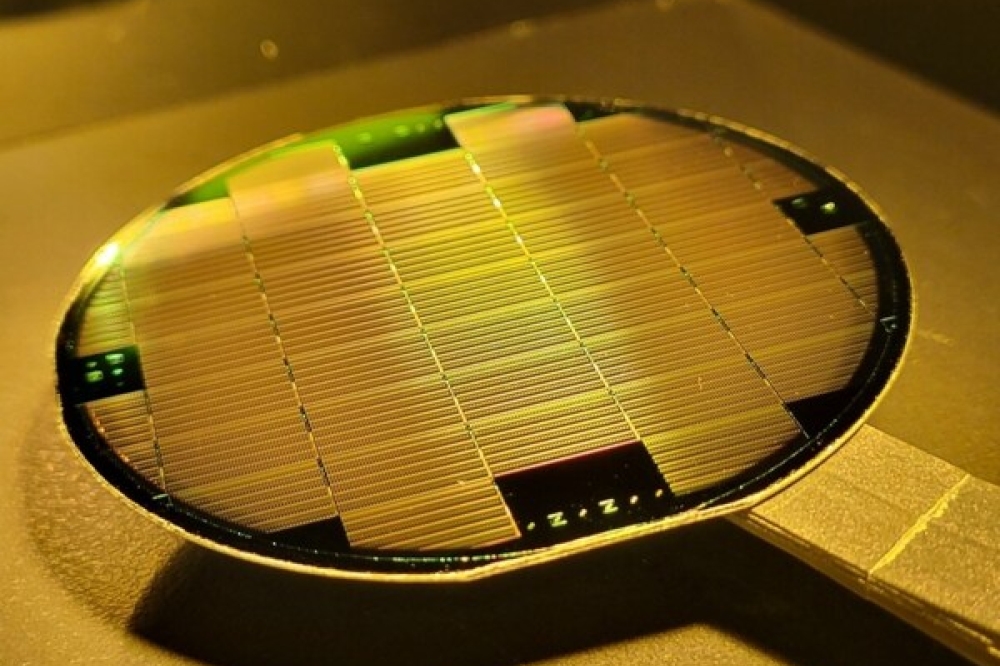

Instead of using a traditional camera lens made of glass or plastic, the optics in the scientists’ camera rely on layers of 50 meta-lenses – flat, lightweight optical components that use microscopic nanostructures to manipulate light. The meta-lenses also function as an optical neural network – a form of AI loosely modelled on the human brain.

According to the scientists, this approach means that much of the computation takes place at the speed of light, enabling the system to identify and classify images more than 200 times faster than neural networks that use conventional computer hardware, and with comparable accuracy. Because the optics rely on incoming light rather than electricity to operate, power consumption is also greatly reduced, the researchers say.
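A rough sketch may help show how a lens can take over part of a neural network's work. The Python below is a toy model built on a textbook assumption, not the team's published design: in the simplest (shift-invariant) model of incoherent imaging, the image a lens forms is the scene's intensity convolved with the lens's point-spread function (PSF), which is the core operation of a convolutional layer. The function names, the PSF values and the small electronic stage are all hypothetical; in this model each lens produces one feature map as the light passes through it, and only the final weighting is done electronically.

```python
# Toy model of "computing with optics" (an illustrative assumption, not the
# published design). In a simple shift-invariant model of incoherent imaging,
# a lens forms an image equal to the scene's intensity convolved with the
# lens's point-spread function (PSF).
from typing import List

Image = List[List[float]]


def optical_convolve(scene: Image, psf: Image) -> Image:
    """'Valid' 2D convolution of an intensity image with a PSF kernel.

    Both are light intensities, so in this model their values are non-negative.
    """
    kh, kw = len(psf), len(psf[0])
    out_h = len(scene) - kh + 1
    out_w = len(scene[0]) - kw + 1
    out = [[0.0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # True convolution: the kernel is flipped in both directions.
            out[i][j] = sum(
                scene[i + a][j + b] * psf[kh - 1 - a][kw - 1 - b]
                for a in range(kh)
                for b in range(kw)
            )
    return out


def optical_layer(scene: Image, psfs: List[Image]) -> List[Image]:
    """Hypothetical array of lenses: each PSF yields one feature map on the sensor."""
    return [optical_convolve(scene, psf) for psf in psfs]


def electronic_head(feature_maps: List[Image], weights: List[float]) -> float:
    """Hypothetical electronic stage: total each feature map, then take a
    weighted sum (weights may be negative here, unlike light intensities)."""
    totals = [sum(sum(row) for row in fm) for fm in feature_maps]
    return sum(w * t for w, t in zip(weights, totals))


if __name__ == "__main__":
    scene = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
    blur_psf = [[0.25, 0.25], [0.25, 0.25]]  # made-up PSF values
    point_psf = [[1.0]]  # an ideal lens that images each point perfectly
    maps = optical_layer(scene, [blur_psf, point_psf])
    print(electronic_head(maps, [1.0, -0.5]))  # 2.0
```

Because light intensities cannot be negative, the optical stage in this sketch uses only non-negative kernels, and the signed weights sit in the electronic stage.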

“Our idea was to use some of the work that Arka pioneered on metasurfaces to bring some of those computations that are traditionally done electronically into the optics at the speed of light,” Heide said. “By doing so, we produced a new computer vision system that performs a lot of the computation optically.”

Majumdar and Heide intend to continue their collaboration. Their next steps include further iterations of the prototype to make it more relevant to autonomous navigation in self-driving vehicles, a promising application area for the technology. They also plan to tackle more complex data sets and problems that require greater computing power, such as object detection (locating specific objects within an image), an important task in computer vision.

“Right now, this optical computing system is a research prototype, and it works for one particular application,” Majumdar said. “However, we see it eventually becoming broadly applicable to many technologies. That, of course, remains to be seen, but here, we demonstrated the first step. And it is a big step forward compared to all other existing optical implementations of neural networks.”